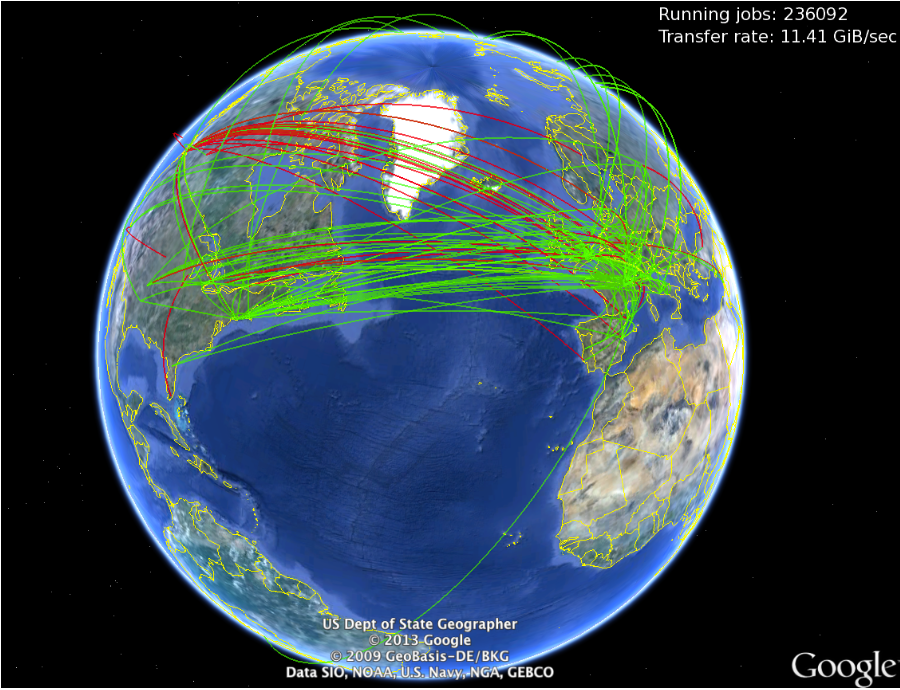

LAPP contributes to this effort through the MUST platform, whose major users include the ATLAS group, for computing time and especially for storage. The involvement of the team that provides daily operational monitoring, working with the LAPP ATLAS group, made this effort a success: MUST was the first French regional site to be labeled a "nucleus", i.e. reliable enough to host data. MUST also offers a local data analysis platform. This achievement is the work of a mixed team of system engineers and physicists familiar with the data placement and computing monitoring tools specific to the ATLAS collaboration.

In 2022, the ATLAS collaboration uses almost 3.8 PB of storage and 4000 processors at LAPP. MUST also hosts a test infrastructure used to deploy beta versions of Grid software components and to take part in an R&D program. Grid software components and monitoring tools increasingly rely on standard components from the IT industry.

The deployed infrastructure was efficient enough to carry out the physics analyses of Run-1 and Run-2, and it is ready for the Run-3 campaign. However, the order-of-magnitude increase in the amount of data produced at the High-Luminosity LHC (HL-LHC) raises new challenges, which must be met with a constant budget and constant staff. The ATLAS-MUST team is involved in the WLCG DOMA (Data Organization, Management and Access) R&D program. To optimize Grid performance and minimize operational costs, organizing data in data lakes [2] is one of the main choices of this program.

Another request, both within the laboratory and at the global level, is to extend this type of service to other scientific communities. Members of the ATLAS-MUST team, together with LAPP's local CTA and LSST teams, help demonstrate the feasibility of the data lake approach through ESCAPE, a multidisciplinary project initiated by the European Commission. As part of this program, the sites of four neighboring laboratories (Annecy, Grenoble, Marseille, Clermont-Ferrand) have joined together in a federation called ALPAMED [3], which was integrated into the ATLAS and ESCAPE data lakes in 2019.

Contacts: Stéphane Jézéquel, Frédérique Chollet, MUST team, Claire Adam-Bourdarios

Publications and articles:

- Overview of the ATLAS distributed computing system, CHEP 2018 conference proceedings

- Architecture and prototype of a WLCG data lake for HL-LHC, CHEP 2018 conference proceedings

- Implementation and performances of a DPM federated storage and integration with the ATLAS environment, CHEP 2019 conference talk by the ALPAMED team